Grad Final Project

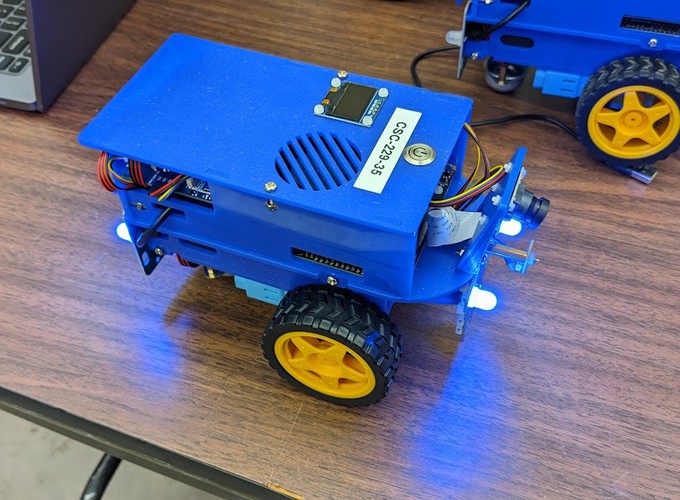

This is my final graduate project for CMPUT-503. It extends lab exercise 5, in which we used a machine learning model trained on MNIST to predict single-digit numbers. In that exercise the model ran on a laptop that received data from the duckiebot; for this project I decided to run the model directly on the duckiebot.

Motivation

In lab exercise 5 we ran the ML model on a laptop because even the smallest ML framework libraries were too big to fit on the duckiebot. However, the resulting communication overhead led to performance issues: network congestion caused data packets to be dropped and re-sent, which led to poor inference performance and system lag. Running the model locally on the duckiebot should avoid this overhead, since all communication happens on the robot.

Model Training Pipeline

Installing TensorFlow Lite or any other lightweight ML framework is difficult because the library package is too big to run on the duckiebot itself. One could implement a custom feed-forward and backpropagation module for the duckiebot, but that would be time-consuming and difficult. Instead, I trained a Multi-Layer Perceptron (MLP) model using PyTorch on a machine with GPU compute and transferred the weights to the duckiebot to run in inference mode. This way I only need to implement the inference portion on the duckiebot, which is straightforward for a simple MLP model.

Model

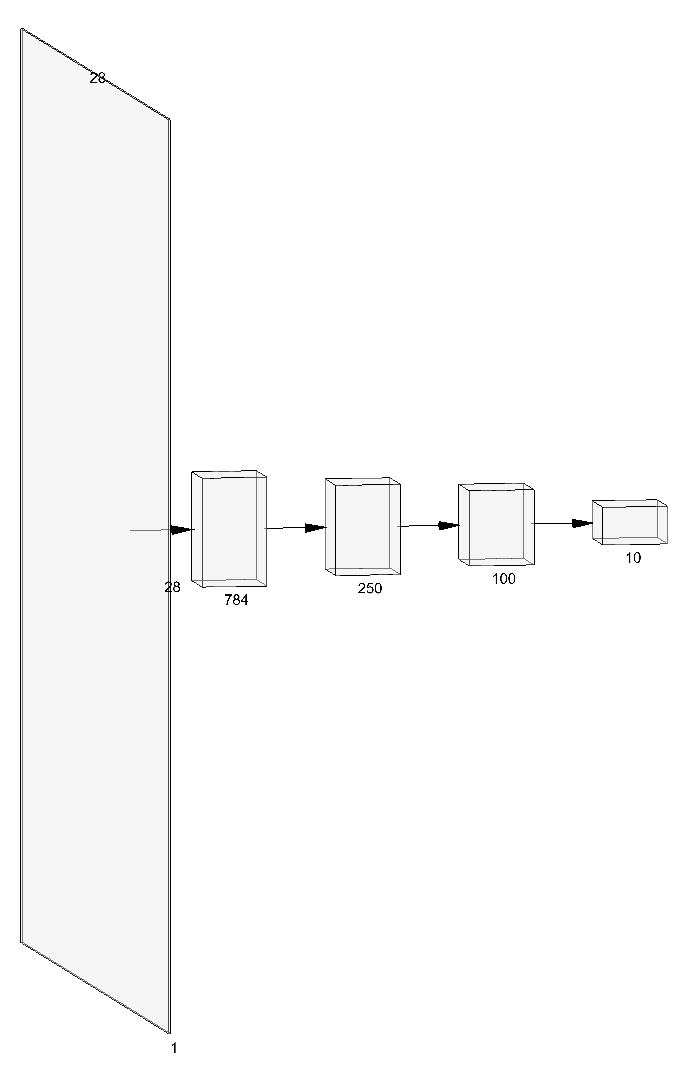

The model is a relatively simple 4-layer, fully connected model that takes 784 inputs from a flattened 28 by 28 gray-scale image and produces 10 outputs, each representing the prediction strength of a digit. Between each layer there is a ReLU activation function and a dropout layer set to 10% to help with over-fitting.
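One consequence of this design is that dropout only matters during training. Below is a minimal NumPy sketch of inverted dropout (the variant PyTorch's `nn.Dropout` uses), which shows why the inference code on the robot can skip dropout entirely. The function name `dropout` and the sample values are illustrative and not taken from the project code.

```python
import numpy as np


def dropout(x, p=0.1, training=True, rng=None):
    """Inverted dropout: zero each element with probability p while training."""
    if not training or p == 0.0:
        # At inference time dropout is the identity, so it needs no code on the robot.
        return x
    rng = np.random.default_rng() if rng is None else rng
    keep_mask = rng.random(x.shape) >= p
    # Scale the surviving elements by 1 / (1 - p) so the expected activation is unchanged.
    return x * keep_mask / (1.0 - p)


activations = np.ones(10_000)
train_out = dropout(activations, p=0.1, training=True, rng=np.random.default_rng(0))
eval_out = dropout(activations, p=0.1, training=False)
```

Because the scaling happens at training time, the exported weights can be used as-is with no correction factor during inference.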

The diagram below shows the model architecture:

Loss function and Optimizer

Cross-entropy loss was used as the loss function because this is a classification task: predicting the correct digit, or label. The PyTorch Adam optimizer was used as it showed good convergence performance for training and validation.
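To make the loss concrete, here is a small NumPy sketch of softmax cross-entropy for a single sample, which is what PyTorch's `nn.CrossEntropyLoss` computes from raw model outputs. The logits below are made-up values for illustration, not outputs of the trained model.

```python
import numpy as np


def cross_entropy(logits, label):
    """Softmax cross-entropy for one sample with an integer class label."""
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    log_probs = shifted - np.log(np.sum(np.exp(shifted)))
    return -log_probs[label]


# Ten made-up output strengths, one per digit; digit 2 has the strongest score.
logits = np.array([0.5, 0.1, 4.0, 0.2, 0.0, 0.3, 0.1, 0.9, 0.4, 0.2])
loss_correct = cross_entropy(logits, label=2)  # true label matches the strongest output
loss_wrong = cross_entropy(logits, label=7)    # true label is a weakly scored digit
```

The loss is small when the true digit has the strongest output and grows as the model puts its confidence elsewhere.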

Dataset

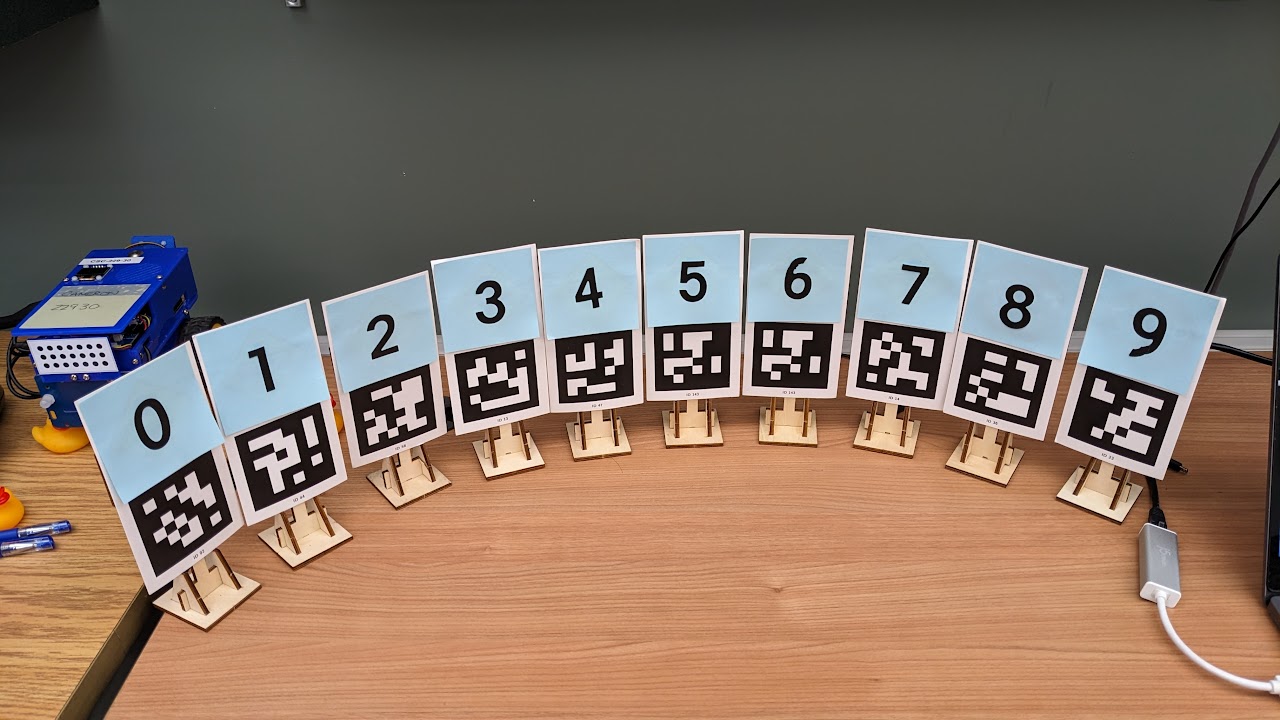

The MNIST training dataset was used to train the model, with a 90:10 split between training and validation. The MNIST test dataset was used to verify that the model works; however, the actual test number used for the experiment was completely different.

Below is an image of the test number used for the experiment:
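As a rough sketch of the 90:10 split, the snippet below shuffles the indices of the 60,000 MNIST training images and holds out 10% for validation. The helper name `split_indices` and the fixed seed are assumptions for illustration; the write-up does not say how the split was actually drawn.

```python
import numpy as np


def split_indices(n_samples, val_fraction=0.1, seed=0):
    """Return shuffled (train, validation) index arrays."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_val = int(round(n_samples * val_fraction))
    # The first n_val shuffled indices form the validation set, the rest the training set.
    return order[n_val:], order[:n_val]


# The MNIST training set has 60,000 images.
train_idx, val_idx = split_indices(60_000)
```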

Training

The model was trained for 10 epochs and had a test loss of 0.066 and a test accuracy of 97.99%. The weights that performed the best were exported to the duckiebot for the experiment.

Inference Pipeline

To use the model on the robot, the image data from the camera must first be pre-processed so that only the grey-scaled number segment is passed to the model.

To do this, the raw image is first converted to the HSV color model.

Then the image is passed through a mask that only lets the color of the post-it note through; everything else is set to 0,0,0.

Then OpenCV is used to find the largest non-black contour in the image. This mitigates noise from any background object that has a similar color to the post-it note. The area of the contour is also checked to see if it is big enough to be a post-it note.

Next, a bounding box is put around the contour to extract it from the image. The extracted image goes through another mask that converts it into a grey-scale image where the number is white and the background is black, similar to the training data.

Next, the grey-scaled image is cropped by 15% to remove noise from the edges, then resized to the training data's 28 by 28 resolution.

Finally, the image is flattened and then processed by the model.

The predicted number is the index with the highest value generated by the model.

Representing the model

The weights of the model are stored as a dictionary of NumPy arrays. The dictionary is constructed so that iterating over it in Python yields the layers in the same order that data flows through the model during training. This makes it easy to implement a simple, fully connected model with simple activation functions in Python using NumPy arrays.
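To illustrate the layout, the sketch below builds a stand-in dictionary with random values and traces the array shapes through it. The key names (`fc1.weight`, ...) and the hidden layer sizes are hypothetical; only the 784 inputs and 10 outputs come from the model description. Activations are left out here since the point is only the ordering and shapes.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical layer sizes: only the 784 inputs and 10 outputs are from the write-up.
sizes = [784, 128, 64, 32, 10]

weights = {}
for i, (n_in, n_out) in enumerate(zip(sizes[:-1], sizes[1:]), start=1):
    # PyTorch's nn.Linear stores its weight as (out_features, in_features).
    weights[f"fc{i}.weight"] = rng.standard_normal((n_out, n_in)) * 0.01
    weights[f"fc{i}.bias"] = np.zeros(n_out)

x = rng.random(784)  # stand-in for a flattened 28x28 image
shapes = []
# Python dicts preserve insertion order, so this visits the layers front to back.
for key, value in weights.items():
    if "weight" in key:
        x = x @ value.T  # transpose so (784,) @ (784, 128) -> (128,), and so on
    else:
        x = x + value
        shapes.append(x.shape)

predicted_digit = int(np.argmax(x))  # index of the strongest output
```

The transpose is needed because of the `(out_features, in_features)` storage convention, which is why it also appears in the inference code.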

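The code segment below shows the inference-only NumPy model used for the experiment:

```python
import numpy as np


class NP_model():
    # Only supports MLP linear models

    def __init__(self, model_weight):
        # Ordered dictionary of NumPy arrays, keyed by layer name
        self.weights = model_weight

    def show_dims(self):
        # Print the shape of every weight and bias array
        for key, value in self.weights.items():
            print(key, value.shape)

    def predict(self, x):
        temp = x
        for key, value in self.weights.items():
            if "weight" in key:
                # Weights are stored as (out_features, in_features), hence the transpose
                temp = np.matmul(temp, value.transpose())
            elif "bias" in key:
                temp = temp + value
                # ReLU; applied after every layer here, including the output layer
                temp = np.maximum(temp, 0)
        return temp
```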

Video demo

The video below shows the model running on the duckiebot in action.

- The green bounding box shows the detected region of the post-it note that contains a number.

- The cyan number above the bounding box shows the predicted digit generated by the model.

- The yellow time to the right of the predicted number shows the total time it took the system to predict a number.

Repo Link

References

- Exercise 5 code that this project is based on: https://github.com/jihoonog/CMPUT-503-Exercise-5

- Exporting weights as numpy: https://discuss.pytorch.org/t/create-a-custom-predict-function/147285